Beginner’s Guide to No-Code Web Scraping: Easy Tools and Techniques

Feeling stuck with no-code web scraping? Then you’ve come to the right place.

I too used to struggle so much as a beginner, so trust me when I say I know what you are going through.

These days I turn to Make.com for any kind of web scraping because it’s extremely powerful and versatile. That power comes with a somewhat steep learning curve, though, so Make.com isn’t really for people just getting started with no-code web scraping.

Luckily, though, there are tools out there made solely for web scraping. These tools are exactly where I started, and they sure brought me to where I am today.

So in this post, I’ll walk you through some of them. Getting the hang of these tools will definitely teach you the basics of web scraping, which you will need down the road when building complicated workflows.

So, without further ado, let’s dive in!

Currently, this post covers two solutions (I hope to add some more in the future):

- Simplescraper

- Google Sheets (native IMPORTXML formula)

Simplescraper

Simplescraper is a Chrome extension-based tool that lets you visually specify what you want to scrape from web pages. Get started by installing the Chrome extension here.

Simplescraper: demo

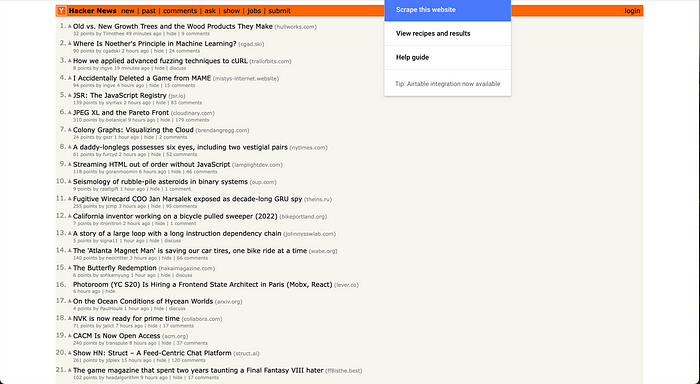

After installing the extension, let’s head over to Hacker News for this tutorial. We’re going to scrape some content from this page.

Click the Simplescraper icon in your extension bar. Now, you should be seeing this screen below. Let’s hit ‘Scrape this website’.

We’re going to scrape Hacker News, but we need to break the process down into the following two steps:

- Scrape a list of links

- Scrape content from the links collected in step 1

It’s pretty handy to remember these two steps because no matter how skilled you are, web scraping almost always comes down to (1) collecting links and (2) scraping content from those links.
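If you’re curious what those two steps look like under the hood, here is a minimal Python sketch. It is only an illustration, not how Simplescraper works internally: the `LISTING` and `PAGES` sample data, the `LinkCollector` class, and the `collect_links` / `scrape_all` helpers are all made up for this example, and the "fetch" step reads from an in-memory dictionary instead of the real web.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Step 1: collect (title, href) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that sits inside a link with an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def collect_links(listing_html):
    parser = LinkCollector()
    parser.feed(listing_html)
    return parser.links


def scrape_all(listing_html, fetch):
    """Step 2: fetch every collected link and keep its raw HTML."""
    return [
        {"title": title, "url": url, "html": fetch(url)}
        for title, url in collect_links(listing_html)
    ]


# Made-up sample data standing in for a listing page and the pages it links to.
LISTING = (
    '<ul><li><a href="https://example.com/a">Post A</a></li>'
    '<li><a href="https://example.com/b">Post B</a></li></ul>'
)
PAGES = {
    "https://example.com/a": "<html><body>Body of A</body></html>",
    "https://example.com/b": "<html><body>Body of B</body></html>",
}

rows = scrape_all(LISTING, PAGES.get)
for row in rows:
    print(row["title"], row["url"])
```

In a real script you would swap `PAGES.get` for a function that downloads each URL, but the shape stays the same: one pass to gather links, one pass to visit them.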

Now, back to Hacker News. Let’s hit ‘Add a property’.

After that, let’s click on the post titles. They will be highlighted in green.

Let’s press ☑️, then the ‘VIEW RESULTS’ button.

Voila! A Simplescraper tab opens up where you can see the result.

Let’s hit “Download CSV” and open the file with the tool of your choice (Google Sheets, Excel, Numbers, etc.).

Once done, let’s open one of the links we have just scraped. From here, we’ll turn to Simplescraper again. Click on the extension, then select the element to scrape. In this tutorial, the links we have do not share the same HTML structure because you can basically post any kind of link on Hacker News.

However, there is one thing that they have in common: ‘body’, the element that wraps the entire visible content of a webpage. So try clicking on an area that makes the entire screen green like so:

Let’s hit ☑️ and press ‘VIEW RESULTS’.

What did we just do? We only scraped one of the many links we have. Do we have to open all the links manually and do the same over and over…?

Of course not! By scraping one link, we have actually created a template that Simplescraper can follow and do the same for other URLs. Do you see this green banner on your result page? It says “Want cloud scraping, multiple pages or to build an API? Click here to save this recipe”.

What it’s saying is “Do you have more links to scrape from? We can create a template (recipe) and reuse it for other links. Oh by the way, Simplescraper will take care of it in the backend (cloud scraping) so you don’t have to have windows open”.

This sounds like what we’re after. Let’s hit the green banner. Doing so will take you to this page below. Adjust the recipe name and everything if you want, then scroll all the way down and press ‘Create recipe’.

Now that the recipe is created, let’s head over to it. The navigation on the left side shows you the list of recipes you created, so click on the one you have just created and hit Crawl. This is where you iterate over the many links you have.

Now, enter all the links into the input field. Simplescraper will auto-save your links, and you are ready to go.

Let’s hit ‘Run recipe’.

The time it takes for Simplescraper to finish the task depends on the number of links you feed it, but once it’s done, click on the ‘Results’ tab. You will see all the scraped content there.

Scroll down to the bottom and hit “Download CSV”, and open it in Google Sheets (or any tool you prefer).

And just like that, you have successfully scraped through many web pages. What you’ll do with the content is up to your imagination!

That wraps up the Simplescraper demo / tutorial. I love how they make it so easy for beginners! One thing I should mention, though, is that you cannot always choose the right element using Simplescraper. Not sure if this is a glitch or not, but I find that a bit frustrating.

That being said, it’s still a great tool to get started with no code web scraping!

Google Sheets

Surprisingly, you can perform web scraping with Google Sheets alone. Of course, there is a limit on how much it can process, but if you are just looking to do some quick data extraction, use Google Sheets!

Google Sheets: demo

So, I uploaded the CSV file from the Simplescraper demo / tutorial. It’s basically a list of post titles and corresponding links. I added a Title column and Body column.

To scrape these elements from the links, we’ll use a function called IMPORTXML. It should look something like below:

=IMPORTXML(B2, "//title")

=IMPORTXML(B2, "//body")

The text you see as “//title” and “//body” is called XPath. It could be as simple as these two, but you can also make it much more complicated depending on how deep into the HTML structure you need to go. Don’t worry. You don’t have to be able to write XPath. Just give the element to ChatGPT and it will tell you the XPath to use. Let’s give it a try.

Just copy and paste the XPath into the IMPORTXML formula. I should mention, however, that not all XPaths work as we expect in Google Sheets. If it gives you #N/A, ask ChatGPT to generate a different XPath, or give it more context, meaning copy a larger part of the HTML that contains the actual element you are after plus one or two parent elements.
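To get a feel for what an XPath actually selects, here is a small Python sketch you can play with. Treat it as a rough illustration under a few assumptions: the `SAMPLE` page is made up, it has to be well-formed XML for Python’s built-in `xml.etree.ElementTree` to parse it, and that module only supports a subset of XPath (paths start with `.//` rather than `//`), so it will not behave exactly like IMPORTXML.

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed sample page (real-world HTML is often messier).
SAMPLE = """<html>
  <head><title>Example post</title></head>
  <body>
    <div class="story"><a href="https://example.com/a">Post A</a></div>
    <div class="ad"><a href="https://example.com/ad">Sponsored</a></div>
  </body>
</html>"""

root = ET.fromstring(SAMPLE)

# Simple path: the first <title> element anywhere in the document.
title = root.findtext(".//title")

# More specific path: only links inside <div class="story">.
story_links = [a.get("href") for a in root.findall(".//div[@class='story']/a")]

print(title)
print(story_links)
```

The second path shows why giving more context (the parent elements) helps: adding the `div[@class='story']` step is what filters out the sponsored link.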

Let’s go back to Google Sheets. If you apply the formulas to every row, it starts fetching, but it takes some time. This is the limitation of web scraping with Google Sheets: you cannot really do anything on a very large scale. But if you look at the bright side, it’s so quick and handy!

I started seeing some #N/As, so let’s change the formulas to the following:

=IFERROR(IMPORTXML(B2, "//title"), "")

=IFERROR(IMPORTXML(B2, "//body"), "")

This will give us something like this below:

Hmm, no more errors but the Body column is spanning across multiple cells. Let me adjust the formulas:

=TEXTJOIN("", 1, IFERROR(IMPORTXML(B2, "//body"), ""))

I basically concatenated all the cells into one. And just like that, we have our scraped data!
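If the nesting of IFERROR and TEXTJOIN feels confusing, this Python sketch mimics the same logic step by step. It is only a rough analogy: `iferror`, `textjoin`, and `fake_importxml` are hypothetical helpers written for this example (they are not Google Sheets APIs), and `FAKE_RESULTS` is made-up data standing in for what IMPORTXML might return across several cells.

```python
def iferror(compute, fallback=""):
    """Rough stand-in for IFERROR: return fallback if compute() raises."""
    try:
        return compute()
    except Exception:
        return fallback


def textjoin(delimiter, ignore_empty, pieces):
    """Rough stand-in for TEXTJOIN over a list of cell values."""
    if isinstance(pieces, str):
        pieces = [pieces]  # a single value behaves like a one-cell range
    kept = [p for p in pieces if p or not ignore_empty]
    return delimiter.join(kept)


# Made-up data: one URL whose body came back split across several cells.
FAKE_RESULTS = {"https://example.com/a": ["First chunk. ", "", "Second chunk."]}


def fake_importxml(url):
    return FAKE_RESULTS[url]  # raises KeyError for unknown URLs, like an #N/A


good = textjoin("", True, iferror(lambda: fake_importxml("https://example.com/a")))
bad = textjoin("", True, iferror(lambda: fake_importxml("https://example.com/missing")))

print(repr(good))
print(repr(bad))
```

The inner step swallows the error and returns an empty value, and the outer step glues whatever is left into a single string, which is why the Body column ends up in one cell.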

Want to learn advanced no code web scraping?

Make.com allows you to perform more flexible web scraping. It’s my go-to tool for web scraping and all things automation. If you want to get started with it, head over to another article of mine below.